What are .mp4 and .mov files?

.mp4 and .mov are standard video formats used by millions around the globe. Do you know how they differ?

If you use a Mac, you are probably familiar with .mov, and if you use Windows, with .mp4. Many other video formats exist, but these two are among the most widely used. Have you ever wondered what these formats are and how they differ?

Before diving in, let's first understand how audio and video can be represented and stored in a format a computer can understand.

Digital video

A video is simply a series of images shown rapidly, one after the other, so that the human eye perceives them as motion.

Have you ever heard the terms "refresh rate" or "frames per second" (fps for short)? A video's frame rate is the number of frames (images) it contains per second, and a display's refresh rate is how many times per second the screen can redraw. If someone tells you that their device supports 30 or 60 fps, the device can show you up to 30 or 60 frames per second. The difference in motion is often noticeable when you watch the same video on a laptop and on a television with a higher refresh rate.

Since each video is a sequence of images, it all boils down to how images are stored on a computer: as a grid of pixels, each represented by, say, the primary colors red, green, and blue (RGB). Each pixel can be stored in 4 bytes of memory.
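To make the 4-bytes-per-pixel idea concrete, here is a minimal sketch. It assumes the fourth byte is an alpha (transparency) channel, which the text above does not specify, and the helper names `pack_pixel` and `make_frame` are made up for illustration.

```python
import struct

def pack_pixel(r, g, b, a=255):
    """Pack red, green, blue, and alpha (0-255 each) into 4 bytes.

    Assumption: the fourth byte is alpha; other layouts exist.
    """
    return struct.pack("4B", r, g, b, a)

def make_frame(width, height, color):
    """Build a solid-color frame as one flat bytes object, pixel by pixel."""
    return pack_pixel(*color) * (width * height)

pixel = pack_pixel(255, 128, 0)
frame = make_frame(2, 2, (255, 0, 0))

print(len(pixel))  # 4
print(len(frame))  # 16 (2 x 2 pixels, 4 bytes each)
```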

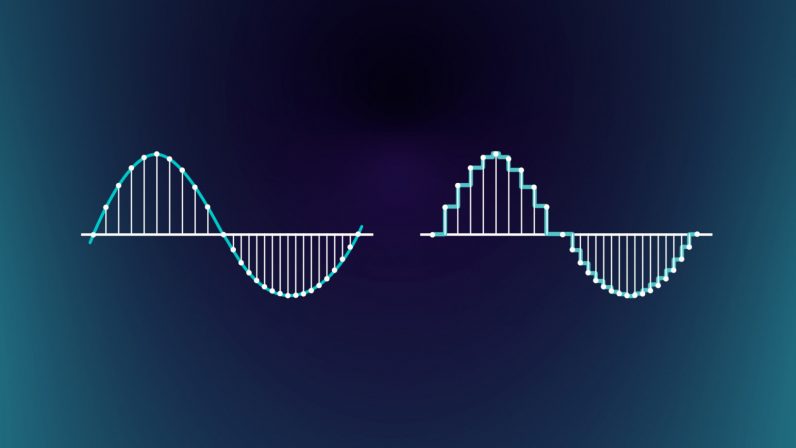

Digital audio

Audio is a wave of pressure propagating through a medium such as air, water, or a solid. Our ears and brain interpret these waves directly, but to play them back later we need to store them somewhere.

Converting these waves into a digital format that computers can understand is tricky. A continuous wave is broken into samples at regular intervals, and each of these samples is quantized (rounded to one of a fixed set of values) and stored. The sample rate is the number of times the audio is sampled per second. For example, audio sampled at 44.1 kHz will have 44,100 data points stored for each second.
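As a rough illustration of sampling and quantization, the sketch below samples a pure sine tone and rounds each sample to an integer. The 440 Hz tone, the 16-bit signed range, and the function name `sample_sine` are assumptions chosen for the example, not details from this post.

```python
import math

SAMPLE_RATE = 44_100   # samples per second (44.1 kHz)
MAX_LEVEL = 32_767     # assumption: 16-bit signed samples

def sample_sine(freq_hz, duration_s, sample_rate=SAMPLE_RATE):
    """Sample a sine wave at regular intervals and quantize each sample."""
    count = int(duration_s * sample_rate)
    samples = []
    for i in range(count):
        t = i / sample_rate                              # time of this sample
        amplitude = math.sin(2 * math.pi * freq_hz * t)  # between -1.0 and 1.0
        samples.append(round(amplitude * MAX_LEVEL))     # quantize to an integer
    return samples

samples = sample_sine(440, 1.0)
print(len(samples))  # 44100 data points for one second of audio
```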

A higher sample rate generally means better audio quality, because it can capture higher frequencies. Each sample can be stored in 2 bytes (16 bits) of memory. To keep this post focused, we won't go into how a sample is encoded in those 2 bytes.

Uncompressed videos are storage intensive

1080p, also known as Full HD or FHD (full high definition), is a ubiquitous display resolution of 1920 x 1080 pixels. Expanding on the MDN web docs, let's look at the storage needed for a 30-minute FHD video.

- A single frame of FHD video in full color (4 bytes per pixel) is 1920 x 1080 x 4 = 8,294,400 bytes (~8 MB).
- At a typical 30 frames per second, each second of FHD video would occupy 8 MB x 30 = 240 MB.
- A minute of FHD video would need 240 MB x 60 = 14,400 MB (~14 GB).
- A 30-minute video conference would need about 400 GB of storage, and a 2-hour movie would take about 1.7 TB.
- The above computation is without considering the audio content. 30 minutes of audio sampled at 44.1 kHz (2 bytes per sample, single channel) would take about 44,100 x 2 bytes x 30 min x 60 sec = 158,760,000 bytes (~159 MB).
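The arithmetic above can be reproduced with a short script. This is only a sketch: the function names are made up for illustration, and the exact results come out slightly higher than the figures in the list because the list rounds 8,294,400 bytes down to 8 MB before multiplying.

```python
def video_bytes(width, height, fps, seconds, bytes_per_pixel=4):
    """Size of uncompressed video: every pixel of every frame is stored."""
    return width * height * bytes_per_pixel * fps * seconds

def audio_bytes(sample_rate, seconds, bytes_per_sample=2, channels=1):
    """Size of uncompressed audio; a single channel is assumed by default."""
    return sample_rate * bytes_per_sample * channels * seconds

one_frame = video_bytes(1920, 1080, fps=1, seconds=1)
one_second = video_bytes(1920, 1080, fps=30, seconds=1)
half_hour = video_bytes(1920, 1080, fps=30, seconds=30 * 60)
half_hour_audio = audio_bytes(44_100, seconds=30 * 60)

print(one_frame)                     # 8294400
print(one_second)                    # 248832000
print(f"{half_hour / 1e9:.1f} GB")   # 447.9 GB
print(half_hour_audio)               # 158760000
```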

A single uncompressed raw video might not even fit on your laptop. Streaming it is just as impractical: one second of such a video (~240 MB) works out to roughly 1.9 Gbit/s, far more than a typical internet connection can carry.

Encoding using codecs

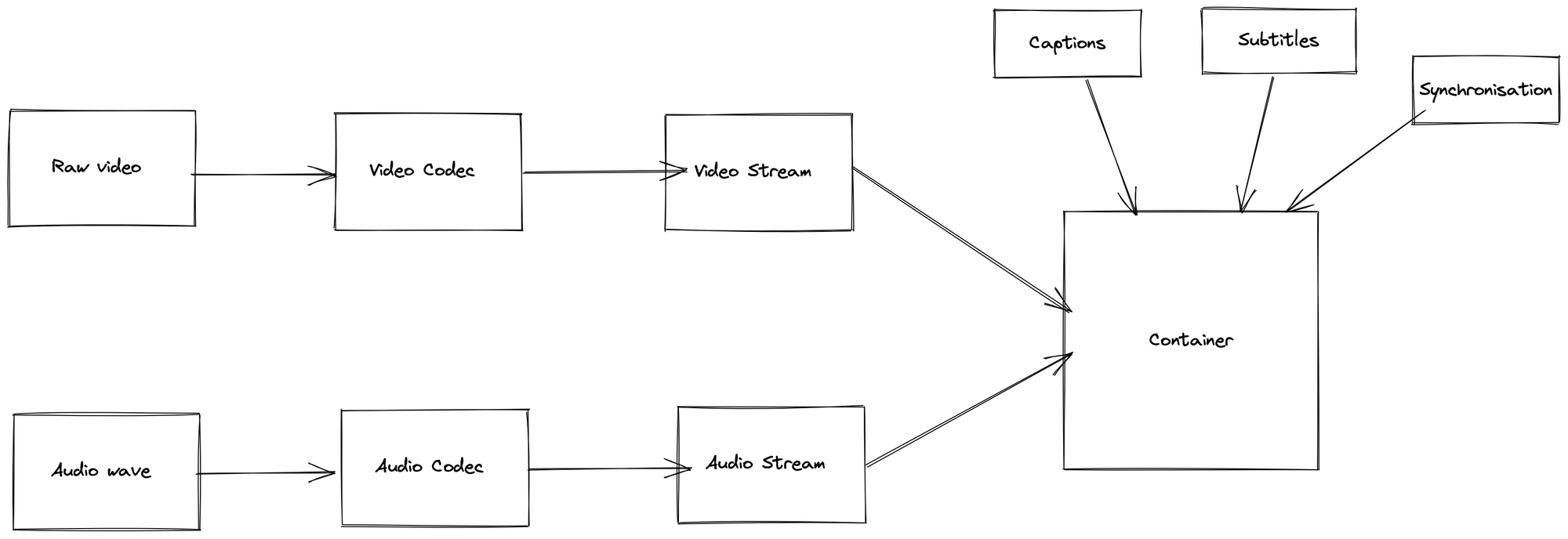

To address the above problem of videos needing an enormous amount of storage, we compress media using audio and video codecs.

- Codecs can be implemented in hardware or in software.

- To compress, codecs use math to find patterns in media (audio/video) and replace all those patterns with references, reducing the file size significantly.

- Codecs can reduce the video size by up to 1000x, which means a 30 min uncompressed video (~400 GB) will now only need ~400 MB of storage.

- Codecs also help with uncompressing media at the time of playback.
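To get a feel for how replacing repeated patterns shrinks data, here is a small sketch using Python's built-in `zlib` module. Note the assumptions: `zlib` is a general-purpose lossless compressor, not a video codec, and the solid-color frame is a synthetic best case. Real video codecs such as H.264 use different techniques that are typically lossy, so treat this only as an analogy.

```python
import zlib

WIDTH, HEIGHT = 1920, 1080

# Synthetic FHD frame: every pixel is the same 4 bytes (solid blue, opaque).
raw_frame = bytes([0, 0, 255, 255]) * (WIDTH * HEIGHT)

# Compress: repeated byte patterns are replaced with short back-references.
compressed = zlib.compress(raw_frame)

# Decompress: the original bytes are rebuilt exactly (lossless).
restored = zlib.decompress(compressed)

print(len(raw_frame))                     # 8294400
print(len(compressed) < len(raw_frame))   # True
print(restored == raw_frame)              # True
```

A frame from a real camera has far less exact repetition, so a general-purpose compressor would do much worse on it; that gap is a large part of why dedicated video codecs exist.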

The process of using a codec to compress a raw video is called encoding. It is often the most time-consuming part of processing a video, and it depends on many factors, like how much you want to compress and the initial size of the uncompressed video. Generally, a short video could take a few seconds, while a long video could take days, depending on the source video and the configuration of the codec.

Containers (file types)

Codecs compress the media into individual audio and video streams. These streams, together with resources like captions, subtitles, and synchronization data (used to make sure audio and video stay in sync), are packaged into something called a container.
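One way to picture a container is as a named wrapper around a list of streams. The sketch below is a simplified mental model only; the `Stream` and `Container` classes are hypothetical and do not reflect the actual binary layout of .mp4 or .mov files.

```python
from dataclasses import dataclass, field

@dataclass
class Stream:
    kind: str    # "video", "audio", or "subtitle"
    codec: str   # codec used for this stream; empty for plain-text tracks

@dataclass
class Container:
    format_name: str                        # e.g. "MPEG-4" or "QuickTime"
    streams: list = field(default_factory=list)

    def kinds(self):
        """List the kinds of streams packaged in this container."""
        return [stream.kind for stream in self.streams]

movie = Container(
    "MPEG-4",
    [Stream("video", "AVC (H.264)"), Stream("audio", "AAC"), Stream("subtitle", "")],
)
print(movie.kinds())  # ['video', 'audio', 'subtitle']
```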

MPEG-4 (.mp4) and QuickTime (.mov) are two widely used containers. Notice that the container also gives the file its extension, which means most videos you encounter daily with extensions like .mp4 and .mov are already compressed. These video files are decompressed only at the time of playback.

Let’s look at some standard codecs supported by these popular containers:

Video codecs

| Codec | Container Support |
|---|---|
| AVC (H.264) | MPEG-4, QuickTime |
| AV1 | MPEG-4 |
| H.263 | MPEG-4, QuickTime |
| MPEG-4 Part 2 Visual | MPEG-4, QuickTime |

Audio codecs

| Codec | Container Support |
|---|---|
| AAC | MPEG-4, QuickTime |
| Apple Lossless (ALAC) | QuickTime |
| FLAC | MPEG-4 |
| MPEG-1 Audio Layer III (MP3) | MPEG-4, QuickTime |

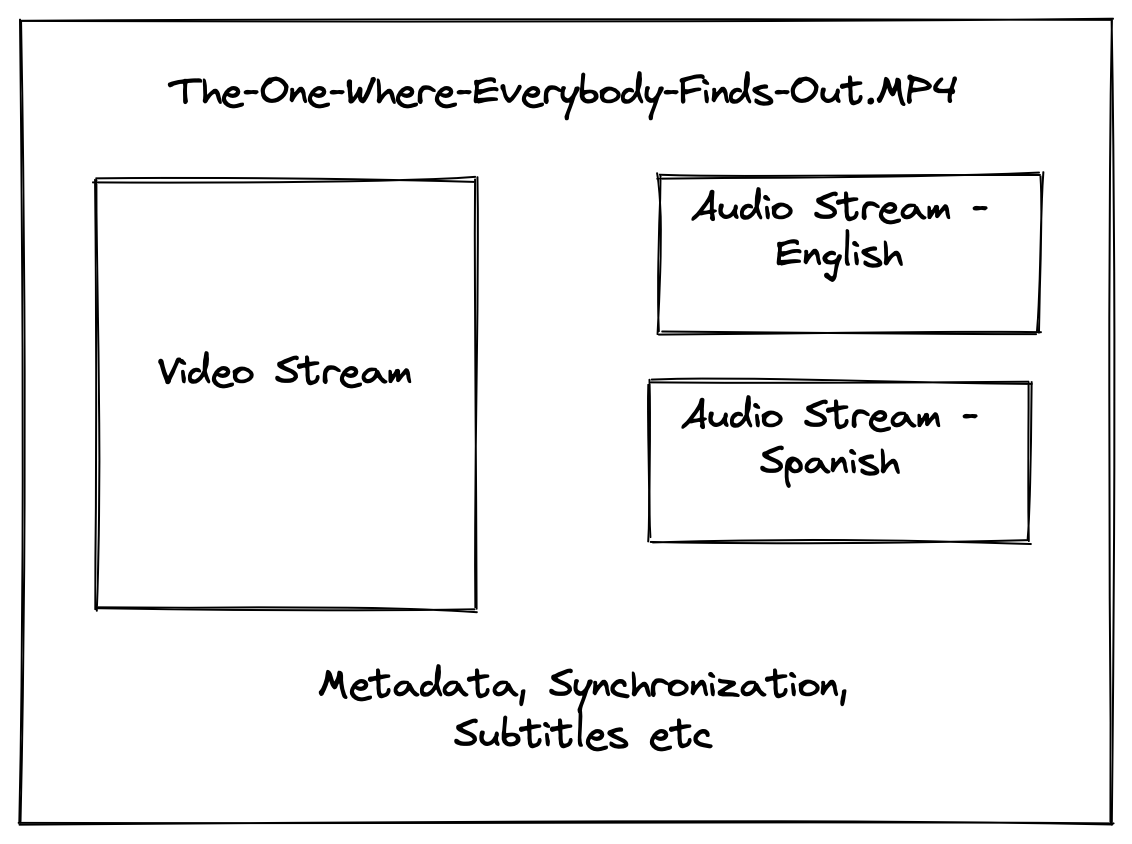

Pairing a video codec with an audio codec from the tables above produces media streams that can be packaged in whichever container supports both, MPEG-4 or QuickTime. For now, think of codecs as algorithms developed to compress data. Check out the links in the above table for more on codecs.
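The two tables can be turned into a small lookup that answers "which container can hold this video codec together with this audio codec?". This sketch goes only by the rows listed above, which may not be exhaustive, and the function name `containers_for` is made up for illustration.

```python
# Container support copied from the two tables above.
VIDEO_CODECS = {
    "AVC (H.264)": {"MPEG-4", "QuickTime"},
    "AV1": {"MPEG-4"},
    "H.263": {"MPEG-4", "QuickTime"},
    "MPEG-4 Part 2 Visual": {"MPEG-4", "QuickTime"},
}
AUDIO_CODECS = {
    "AAC": {"MPEG-4", "QuickTime"},
    "Apple Lossless (ALAC)": {"QuickTime"},
    "FLAC": {"MPEG-4"},
    "MPEG-1 Audio Layer III (MP3)": {"MPEG-4", "QuickTime"},
}

def containers_for(video_codec, audio_codec):
    """Return the containers that list support for both codecs."""
    return VIDEO_CODECS[video_codec] & AUDIO_CODECS[audio_codec]

print(sorted(containers_for("AVC (H.264)", "AAC")))  # ['MPEG-4', 'QuickTime']
print(sorted(containers_for("AV1", "FLAC")))         # ['MPEG-4']
```

A pairing fits in a container only when both codecs list it, so an empty result means the tables show no container in common for that pair.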

A typical .mp4 file can be represented like this -

More on video processing coming soon?

Keep an eye out for the next blog in this series, where we discuss in detail how you, as a developer, can best optimize streaming to the end users of your video application.

If you have any questions or want to know more about how we handle videos in Spiti, reach us on Twitter at @spitidotxyz or write to us at support@spiti.xyz.
